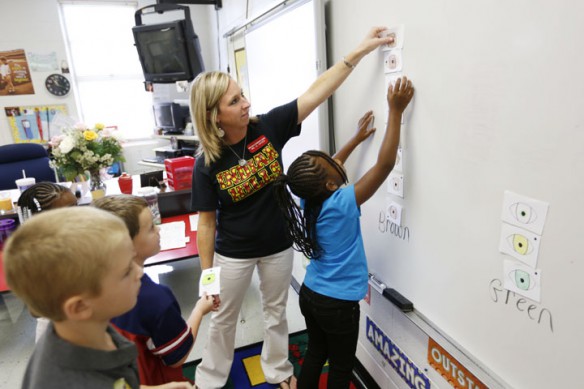

Amber Hayes teaches 2nd-grade students how to graph information at Indian Hills Elementary School (Christian County). Hayes likes how PGES helps with goal setting and fostering conversations with administrators. Photo by Amy Wallot, Aug. 16, 2013

By Matthew Tungate

matthew.tungate@education.ky.gov

Teachers and principals who participated in last year’s field test of the teacher Professional Growth and Effectiveness System (PGES) overwhelmingly support its use and four of the five measures that make it up, according to results of spring focus groups and surveys released last month. Teachers and principals also felt the Kentucky Department of Education (KDE) adequately trained and supported them in implementing the PGES.

To ensure effective educators in every classroom and school, KDE is developing the Professional Growth and Effectiveness System (PGES). The statewide system for teachers is designed to be fair and equitable and uses multiple measures of effectiveness including student growth, professional growth and self-reflection, observation, and student voice in the evaluation process. Last year more than 50 districts participated in a field test of the system, and this year teachers from every district are participating in a statewide pilot.

A steering committee made up of teachers and other education stakeholders is reviewing data collected from the field test and statewide pilot to make recommendations to the Kentucky Board of Education about how measures will be used in the summative evaluation process when it goes statewide to every school during the 2014-15 school year.

KDE research analyst Bart Liguori said more than 80 percent of respondents feel comfortable using the PGES for determining their level of effectiveness.

“A lot of people are saying that this is some of the best stuff that Kentucky’s ever done in terms of teacher effectiveness and this is something that is really revolutionary and it’s very good to be on the cutting edge, and people really appreciate that opportunity,” he said.

More than 80 percent of participants also said they feel observation, student growth, reflective practice and professional growth plans are appropriate to support the PGES, Liguori said.

Amber Hayes, a 2nd-grade mathematics teacher at Indian Hills Elementary School (Christian County), participated in the teacher PGES as an observer and as a teacher being observed last year. She feels much the same as most of the teachers who participated in the survey.

“PGES allows teachers to set student goals as well as teacher goals, and meeting with my administrator for post-observation conference was a great opportunity for self-reflection because it was teacher- and administration-based conversation on each evaluation. Together we decided on what needed to be changed, but the majority of the meeting is led by the teacher,” Hayes said.

Hayes said she liked the PGES observations better than traditional observations because the new system is evidence-based and the teacher and principal come up with ratings together.

“It gives the teacher good feedback of what is actually viewed in the classroom, not the opinions of the principal,” she said. “I think it leads to productive conversations and valid changes when needed.”

Amanda Ellis, principal at Emma B. Ward Elementary (Anderson County), and two teachers participated in the PGES field test. She will have five teachers participating this year. Ellis also is the only principal on the teacher PGES steering committee, and has come around on the new effectiveness system over the last three years.

She initially was nervous that there would be too much paperwork and too many measures for teachers and principals to keep up with.

“I used to see them as a list, the litany of things we had to collect. But when you really dig into it and you start to work through it, you start to see how things interrelate,” Ellis said. “When you have those pieces, that gives you the tools to be very intentional on growing professionally and knowing where you have been and where you want to go and how you’re going to get there.”

Ellis recently introduced the PGES to teachers who won’t be participating in the state pilot. She said they all immediately began reflecting on how they would be rated in the new system.

“It’s a very different set of expectations. It’s very clear, it’s rubric-based, it’s very objective – so they like knowing what’s expected of them and what tool to use to measure that domain. It just makes it so much clearer. But it is much more rigorous,” she said.

The one measure that received less than overwhelming support was student voice, which surveys students’ perceptions of three areas relative to their classroom: content knowledge or professional learning; pedagogical skill; and relationships with students. About 57 percent of teachers and principals agreed or strongly agreed that student voice was appropriate to support the PGES, and about 45 percent said they got positive feedback from their colleagues about it.

Ellis said her staff had much the same reaction to student voice as others who responded to the survey.

Ellis said veteran teachers remember when students were surveyed following testing in the early years of KERA, and teachers were getting survey results that were inaccurate because students didn’t understand the questions.

“So they became very fearful of inaccurate student perceptions,” she said.

Teachers also were concerned that students would give them poor marks because they were mad at them, but after looking at the questions and talking about it more, Ellis said the teachers realized the questions don’t lend themselves to those kinds of answers.

“It’s very intimidating to be judged by an 8-year-old,” she said.

Yet teachers realize the benefits of student surveys, Ellis said. She said one of her teachers who participated last year was stunned when only 70 percent of her students thought she had high expectations. She said the teachers found more areas of improvement from the student feedback than from her feedback.

“Kids know,” Ellis said.

Liguori agreed and said the evidence bears that out. According to the Measures of Effective Teaching (MET) project, student survey data has the strongest correlation to student achievement.

Liguori pointed out that teachers may have trepidation because many are not familiar with the questions and because it is new.

“As we go along, I would hope people would become more familiar with it and be able to make more informed opinions on it,” he said.

Participants in the field test also suggested weights for each of the measures. Respondents felt observation should have the greatest weight at about 40 percent, with student growth coming in second at about 26 percent, followed by professional growth planning at almost 22 percent and student voice at about 12 percent.

Liguori said Kentucky teachers, unlike those in other states, acknowledge that student growth should be a big part of their evaluation.

“Teachers in Kentucky recognize that that’s such an important part of what we’re doing in education,” he said.

The Teacher Effectiveness Steering Committee discussed the weighting of the measures at its August meeting.

Ellis said the steering committee takes comments from teachers very seriously, and she expects the opinions in the surveys and focus groups to carry serious weight.

Ellis thinks that will make a big difference in how teachers accept the PGES.

“If you treat them respectfully like the professionals that they are, and give them time to let it sink in, they’re going to run with it because they want to be better,” she said.

MORE INFO …

Bart Liguori, bart.liguori@education.ky.gov, (502) 564-4201
